NASA's Interstellar Probe reaches 1,000 AU

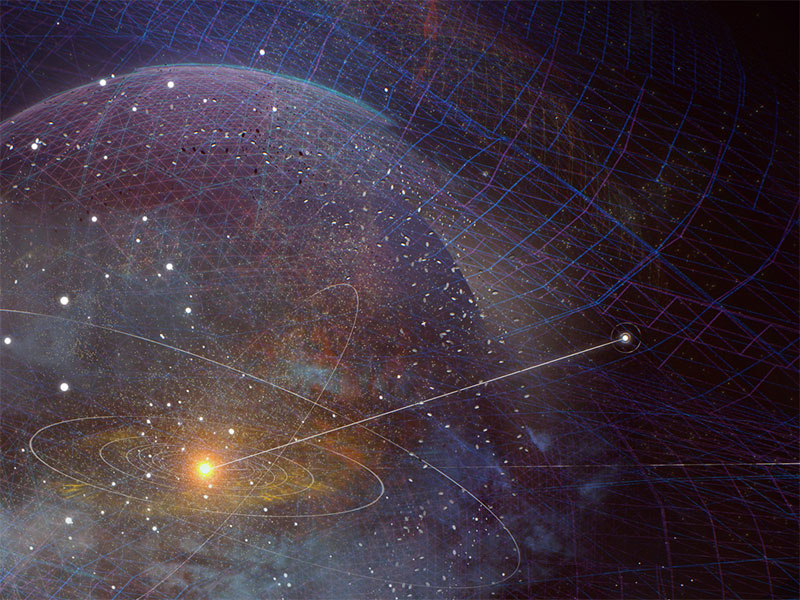

The Interstellar Probe (IP) is a very long-term and long-range space mission, designed by the Johns Hopkins University Applied Physics Laboratory (APL) and funded by NASA, to explore beyond the edge of the Solar System further than any previous spacecraft. Launched in the early 2030s, it travels to a target destination of 1,000 astronomical units (AU), or 1,000 times the distance from the Sun to Earth.

Five earlier spacecraft had passed through the heliopause – an invisible boundary where the solar wind is stopped by the interstellar medium, because it is no longer strong enough to push back the stellar winds of surrounding stars. These were Voyager 1 (2012), Voyager 2 (2018), Pioneer 11 (2027), New Horizons (2043) and Pioneer 10 (2057).

The Interstellar Probe, however, is designed to go much further than ever before. One of its main objectives is to obtain an updated "Pale Blue Dot" image (first made famous by Voyager 1 in 1990), this time from a vantage point almost 25 times more distant. In other words, it aims to capture a photo looking back at Earth and the entire contents of the heliosphere while located 150 billion km (93 billion miles) from the Sun. This is 0.02 light years, or about 5.8 light days, and roughly halfway to the inner edge of the Oort cloud.
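The distance figures above can be checked with a short conversion. This is a minimal sketch rather than anything from the mission itself: the function name `au_to_units` is hypothetical, and the constants are the standard defined values for the astronomical unit, the statute mile and the speed of light.

```python
# Unit-conversion sketch for the probe's 1,000 AU target distance.
AU_KM = 149_597_870.7      # kilometres per astronomical unit (IAU definition)
KM_PER_MILE = 1.609344     # kilometres per statute mile
C_KM_S = 299_792.458       # speed of light in km/s
SECONDS_PER_DAY = 86_400
DAYS_PER_YEAR = 365.25     # Julian year, as used for the light year


def au_to_units(au: float) -> dict:
    """Convert a distance in AU to km, miles, light days and light years."""
    km = au * AU_KM
    light_days = km / C_KM_S / SECONDS_PER_DAY
    return {
        "km": km,
        "miles": km / KM_PER_MILE,
        "light_days": light_days,
        "light_years": light_days / DAYS_PER_YEAR,
    }


target = au_to_units(1_000)
for unit, value in target.items():
    print(f"{unit:>12}: {value:,.4g}")
```

Under these constants, 1,000 AU comes out at roughly 150 billion km, 93 billion miles, 5.8 light days and just under 0.02 light years, in line with the rounded figures quoted.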

The mission is launched by a powerful new rocket, NASA's Space Launch System (SLS), which helps generate the velocity needed to cross the Solar System in record time. The nuclear-powered probe swings by Jupiter for a gravity assist and further speed boost. It reaches the heliopause in just 15 years, covering 8 AU per year, more than twice as fast as the earlier Voyager probes. It then continues onward into deep space, remaining operational for another 35 years, with its final transmissions received at ~1,000 AU. Along the way, it searches for objects in the Kuiper Belt and beyond, including rogue planets, while studying dust distributions to provide constraints on the total number of bodies in this remote region.

In addition to capturing imagery of the distant Earth and other points of interest, the IP determines the size and shape of the heliosphere "bubble" surrounding our Solar System and confirms the density of atoms per cubic metre at progressively further locations. This large-scale model of the heliosphere can be extrapolated to other star systems, revealing new knowledge of stellar dynamics, showing how our own heliosphere fits in the family of other astrospheres and providing new clues about the habitability of exoplanets.

The IP becomes the first NASA mission to characterise, in detail, the local interstellar medium (LISM) lying beyond the heliosphere. Earlier studies had hinted at not one, but possibly four different interstellar clouds in contact with our heliosphere. Data from the IP form a more detailed picture of our galactic neighbourhood and how it shapes our heliosphere. This determines whether our Sun is entering a new region of interstellar space with drastically different properties.

Credit: Johns Hopkins APL

2080

Some humans are becoming more non-biological than biological

Today, the average citizen has access to a wide array of biotechnology implants and personal medical devices. These include fully artificial organs that never fail, bionic eyes and ears providing Superman-like senses, nanoscale brain interfaces to augment the wearer's intelligence, and synthetic blood and bodily fluids that can filter deadly toxins and provide hours' worth of oxygen in a single breath.

Some of the more adventurous citizens are undergoing voluntary amputations to gain prosthetic arms and legs, boosting strength and endurance by orders of magnitude. There is even artificial skin based on nanotechnology, which can be used to give the appearance of natural skin when applied to metallic limbs.

These various upgrades have become available in a series of gradual, incremental steps over preceding decades, such that today, they are pretty much taken for granted. They are now utilised by a wide sector of society – with even those in developing countries now having access to some of the available upgrades due to exponential trends in price performance.

Were a fully upgraded person of the 2080s to travel back in time a century and be integrated into the population, they would be superior in almost every way imaginable. They could run faster and for longer distances than the greatest athletes of the time; they could survive multiple gunshot wounds; they could cope with some of the most hostile environments on Earth without too much trouble. Intellectually, they would be considered geniuses – thanks to various devices merged directly with their brain.

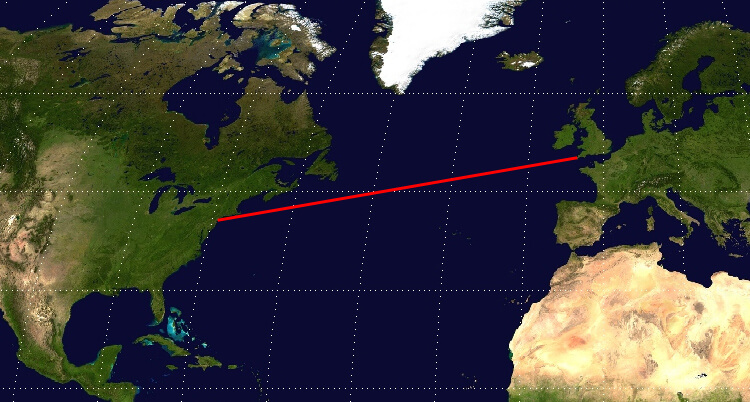

Construction of a transatlantic tunnel is underway

Built using advanced automation and robots – and controlled by AI – this is among the largest, most ambitious engineering projects ever undertaken. With hyperfast Maglev trains travelling at up to 4,000 mph, passengers using the tunnel can be delivered from Europe to America in under an hour.

Carbon nanotubes, along with powerful geo-sensing devices, have been paramount in the structure's design – these can self-adjust in the event of undersea earthquakes, for example. Also noteworthy is that the train cars operate in a complete vacuum. This eliminates air friction, allowing hypersonic speeds to be reached. The cost of this project is in the region of $88-175bn.
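The "under an hour" journey time follows from simple arithmetic. The sketch below is illustrative only: the tunnel's route is not given here, so the distance is an assumed figure, roughly the great-circle distance between London and New York. It also treats the whole trip as running at top speed, ignoring acceleration and braking.

```python
# Rough travel-time sketch for the transatlantic tunnel.
ASSUMED_ROUTE_MILES = 3_460   # assumption: approx. London-New York great-circle distance
TOP_SPEED_MPH = 4_000         # top Maglev speed quoted in the text


def cruise_minutes(distance_miles: float, speed_mph: float) -> float:
    """Minutes needed to cover a distance at a constant speed."""
    return distance_miles / speed_mph * 60


minutes = cruise_minutes(ASSUMED_ROUTE_MILES, TOP_SPEED_MPH)
print(f"Journey at constant top speed: {minutes:.0f} minutes")
```

With these assumptions the crossing takes a little over 50 minutes, which leaves only a small margin for speeding up and slowing down at either end.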

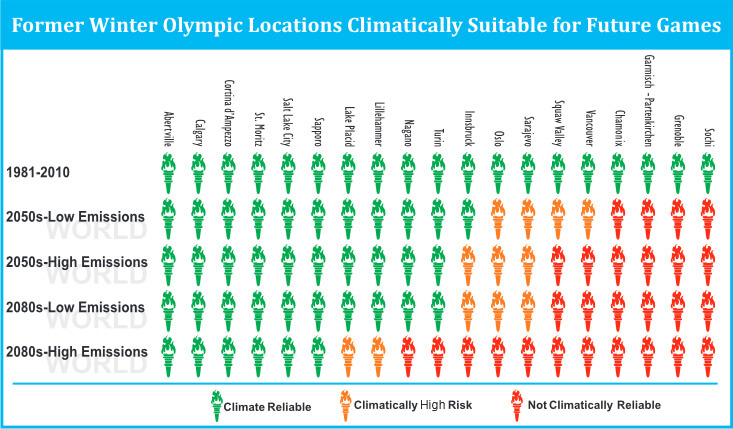

Many former Winter Olympics venues no longer provide snow

Rising temperatures have rendered many former Winter Olympic sites "climatically unreliable" – that is to say, unable to provide snow on a regular basis. Although geoengineering efforts have been underway for some time, these have not yet managed to stabilise the global climate. Former locations that are now either unsuitable or forced to rely on artificial snow include Sochi (Russia), Grenoble (France), Garmisch-Partenkirchen (Germany), Chamonix (France), Vancouver (Canada) and Squaw Valley (US), with a number of others remaining at high risk. Aside from the Olympics, winter sports in general are increasingly being moved indoors, or are taking place in simulated environments.

Polar bears face extinction

Between 2000 and 2050, polar bear numbers dropped by 70 percent, due to shrinking sea ice caused by global warming. By 2080, they have disappeared from Greenland entirely – and from the northern Canadian coast – leaving only dwindling numbers in the interior Arctic archipelago.

For the few that remain, ice breaking up earlier in the year forces them ashore before they have time to build up sufficient fat stores. Others are forced to swim huge distances, which exhausts them, leading to drowning. The effects of global warming have led to thinner, stressed bears, decreased reproduction, and lower juvenile survival rates.

One in five lizard species are extinct

The ongoing mass extinction has claimed many exotic and well-known lizards. One in five species are now extinct as a result of global warming. Lizards are forced to spend more and more time resting and regulating their body temperature, which leaves them unable to spend sufficient time foraging for food.

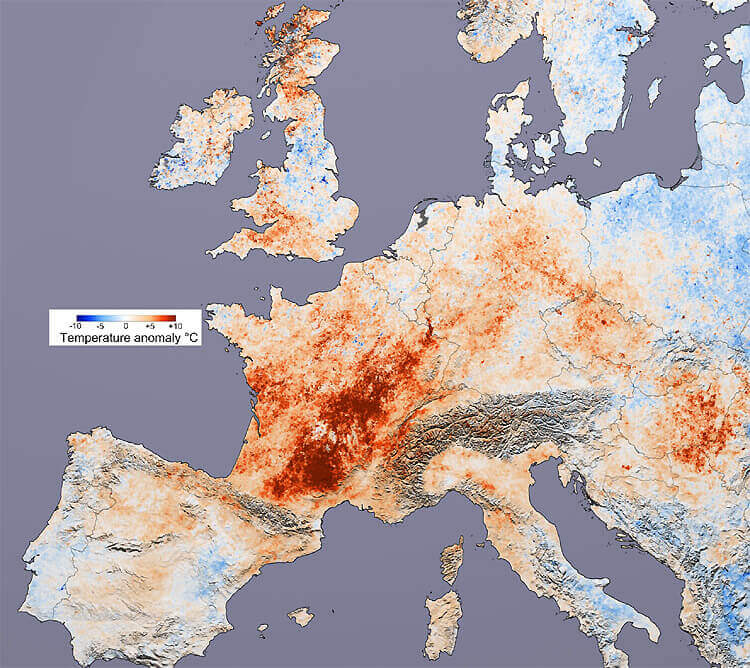

Deadly heatwaves plague Europe

Heatwaves more severe than the one seen in 2003 have become annual occurrences by this time. In the peak of summer, temperatures in major cities such as London and Paris reach over 40°C. In some of the more southerly parts of the continent, temperatures of over 50°C are reported. Thousands are dying of heat exhaustion. Forest mega-fires rage in many places, while prolonged, ongoing droughts are causing many rivers to run permanently dry. Spain, Italy and the Balkans are turning into desert nations, with climates similar to North Africa.

Credit: NASA

2083

V Sagittae becomes the brightest star in the night sky

In 2083, a previously faint star system known as V Sagittae erupts in a spectacular nova outburst, becoming the brightest star in the night sky. V Sagittae is a cataclysmic variable binary, located approximately 7,800 light years from Earth. During earlier observations, it was found to consist of a main sequence star of about 3.3 solar masses and a white dwarf of about 0.9 solar masses, orbiting each other every 0.5 days. At such close range, material from the larger star accreted onto the white dwarf at an exponentially increasing rate.

From 1890 to 2020, the pair brightened by a factor of 10 and continued to gain in brightness throughout subsequent decades. Astronomers considered the difference in mass between the two highly unusual – in all other cataclysmic variables (CVs), the white dwarf was more massive. This made V Sagittae the most extreme of all known CV systems, about 100 times more luminous than normal and with a powerful stellar wind equal to the most massive stars prior to their deaths.
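For readers more used to the astronomical magnitude scale, a tenfold brightening can be converted with the standard Pogson relation, in which a brightness ratio R corresponds to a magnitude change of -2.5 log10(R). The sketch below is illustrative only and the function name is hypothetical. Note that brighter objects have numerically smaller magnitudes.

```python
import math


def magnitude_change(brightness_ratio: float) -> float:
    """Change in apparent magnitude for a given brightness ratio.

    Uses the Pogson relation; a negative result means the object got brighter.
    """
    return -2.5 * math.log10(brightness_ratio)


# The factor-of-10 brightening quoted for 1890-2020
delta_m = magnitude_change(10)
print(f"Change in magnitude: {delta_m:+.1f}")
```

A factor of 10 in brightness therefore corresponds to a shift of 2.5 magnitudes towards the bright end of the scale.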

Observed to be in the late stages of an in-spiral, the two would ultimately collide and coalesce, creating a powerful burst of light. This occurs in 2083 and results in a tremendous release of gravitational potential energy, driving a stellar wind as never before seen, and raising the system luminosity to near that of a supernova at its peak. This explosive event lasts for more than a month, as the objects merge into one star, during which time V Sagittae outshines even Venus and Sirius. The merger creates a degenerate white dwarf core, and a hydrogen-burning layer, surrounded by a vast gas envelope consisting mostly of hydrogen – eventually becoming a red giant.

Credit: Bob King, Sky & Telescope

2084

Conventional meat is becoming obsolete

For many thousands of years, humans had practised animal husbandry. This began during the Neolithic revolution from around 13,000 BC, when animals were first domesticated, marking a transition from hunter-gatherer communities to agriculture and settlement. By the time of early civilisations such as ancient Egypt, various animals were being raised on farms including cattle, sheep, goats and pigs.

The 15th and 16th centuries witnessed the "Columbian Exchange", when Old World crops and livestock were brought to the New World. Other historical developments included the British Agricultural Revolution, or Second Agricultural Revolution, which saw an unprecedented increase in labour and land productivity in Britain between the mid-17th and late 19th centuries, with livestock breeds improved to yield more meat, milk, and wool.

The so-called Green Revolution, or Third Agricultural Revolution, occurred in the mid-to-late 20th century. This boosted agricultural production worldwide – particularly in developing regions – through a series of technology transfer initiatives. An expansion of irrigation infrastructure, chemical fertilisers, pesticides, mechanisation, better land management and other modernisation techniques allowed the production of new and higher-yielding varieties of wheat, rice, maize and other food grains. The resulting increase in harvests led to a major improvement in available food supplies, both for humans and as livestock feed.

In the early 21st century, however, the global agricultural system faced new and profound challenges. Demand for meat products had soared, led by Asia with its vast populations and rapidly rising incomes. Due to urban expansion and other environmental pressures, arable land was on course to decline from 0.38 hectares per capita in 1970, to a projected 0.15 hectares per capita by 2050. Topsoil had suffered immense damage: tens of billions of tons were being lost through intensive farming each year, one-third had been eroded since the Industrial Revolution, and the remainder was forecast to disappear completely by 2075. Fresh water had become increasingly scarce, while nitrogen and other agricultural runoffs polluted the world's rivers, lakes, seas and oceans. In addition to all this, global reserves of phosphorus, essential for many agricultural systems, appeared to be reaching a peak, with most of the remaining supplies confined to just four countries: Morocco, China, Algeria and Syria.

Another major issue to emerge around this time was antibiotic resistance. In the past, antibiotics were routinely added to certain compound foodstuffs in order to maximise livestock health and growth, but this practice was increasingly frowned upon in many countries due to the risk of drug-resistant bacteria. By 2020, approximately 700,000 people were dying each year from such infections, with 60% of these diseases originating from animals. This figure was on track to reach 10 million per year by 2050, becoming a bigger killer than cancer.

Public attitudes towards meat in general were shifting. The cultural zeitgeist was gradually moving away from traditional animal slaughter and in favour of new, alternative ways to produce meat. The climate crisis, resulting in part from the livestock industry (accounting for 15% of greenhouse gas emissions), gave added momentum to the need for change.

Novel vegan meat replacements, completely made of plant-based inputs, emerged as a popular substitute in the 2010s. With sophisticated production techniques making use of haemoglobin and binders to hold ingredients together – extracted via fermentation from plants – they could mimic the sensory experience of meat and even blood.

However, an even more realistic alternative was in development: cultured meat, produced by in vitro cultivation of animal cells. In 2012, Dutch scientists created a rudimentary form of synthetic meat, consisting of thin strips of muscle tissue derived from a cow's stem cells. The following year, they became the first group to produce a complete burger, grown directly from animal cells.

The first lab-grown burger had cost $384,000. This was more than just a novelty, however. Other scientists and companies were beginning to research and develop their own versions. Tens of millions of dollars began to flow into this nascent industry. By the first half of the 2020s, cultured meat was commercially available in a number of restaurants and supermarkets around the world.

As the technology advanced – not only becoming cheaper and easier to utilise, but also expanding the types of cell tissue available – the number of startups began to explode. Meanwhile, larger and more established companies steadily increased their investments.

A variety of cultured meats entered the market – everything from burgers to hot dogs, meatballs, nuggets, sausages and steaks. Production involved many of the same tissue engineering techniques traditionally used in regenerative medicine, such as inducing stem cells to differentiate, with bioreactors to "grow" the foods, and scaffolds to support the emerging 3D structures. By accumulating cells in a strictly controlled environment, the final products could be manufactured in far cleaner and safer ways, free from any harmful organisms.

The introduction of food labels helped consumers to identify synthetic or "clean" meat in shops, as had been done for organic, free range, and certain other classifications in the past. Mainstream adoption occurred in many countries, as these products became cost-competitive. By the late 2030s, the worldwide market for conventional slaughtered meat was being overtaken by the combination of cultured meat and novel vegan replacements. By the early 2040s, cultured meat alone had achieved market dominance, despite attempts by established farming lobbyists to slow progress. New biotechnology methods continued to disrupt not only the meat industry, but the entire food sector, as a whole range of synthetic products such as milk, egg white, gelatine and many types of fish could now be created with similar technology.

As the decades went by, the consumption of traditional meat became increasingly taboo – morally equivalent to foie gras, or shark fin soup. This was driven in part by animal welfare concerns, but a greater ethical issue was the climate crisis now rapidly worsening and engulfing much of the world. Cultured meat had the potential to drastically reduce environmental impacts, with 95% lower greenhouse gas emissions and requiring 99% less land.

Having consolidated into a mature industry across the developed world, cultured meat became more widespread in poorer nations during the 2050s. However, traditional meat still accounted for hundreds of billions of dollars in revenue and remained a significant commodity in some regions, with cultural and societal aspects that went beyond mere economics. Although continuing to shrink globally, it now entered an S-curve of slower decline. The industry would survive for another few decades yet.

By the 2080s, the world has changed almost beyond recognition. Biotechnology has swept aside traditional agriculture and its many related infrastructures – rendering vast areas of farmland redundant and providing an opportunity to restore forests, rivers, lakes and other natural features. Many regions have outright banned the slaughter of animals. Most households with at least a middle income have access to a kitchen appliance that can replicate meat products and other foodstuffs within a matter of minutes. Many people today look back in horror at the farming practices of the past, which killed upwards of 65 billion animals per year and had gigantic environmental impacts. In 2084, only a negligible percentage of the world's people still raise livestock for meat.

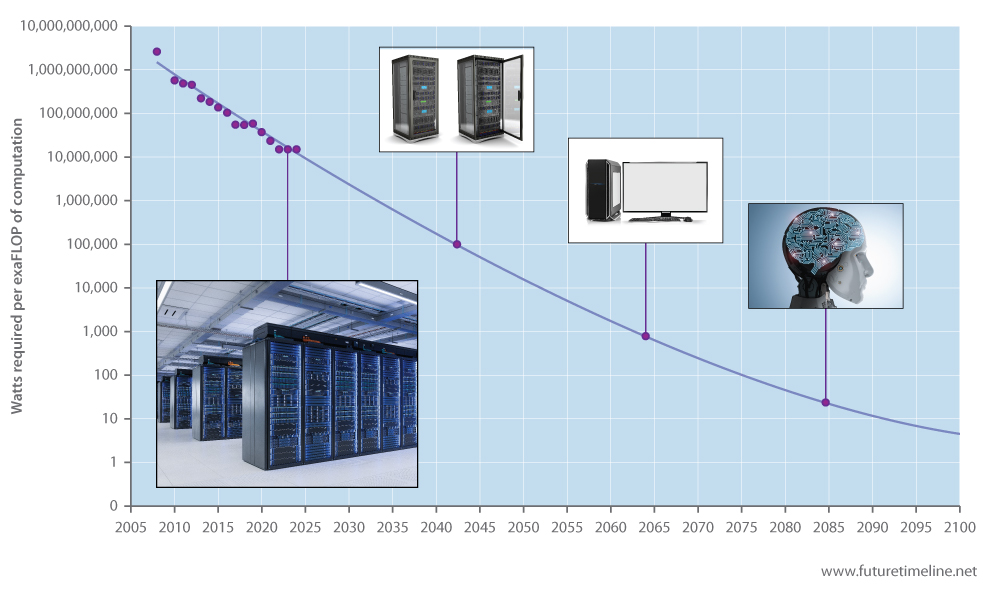

Robotic brains match the energy efficiency of human brains

During the early 21st century, researchers began to prioritise power efficiency as a key factor in the development of computing systems. The growing demand for more powerful technology required innovations in thermal management and energy consumption, both to lower environmental impacts and to prevent overheating in densely packed hardware. As computing power increased exponentially, so too did the need for machines that could process vast amounts of data without consuming unsustainable levels of energy.

Supercomputers at this time were power-hungry behemoths, often consuming tens of megawatts of electricity – equivalent to a small town. The Green500 list emerged in 2007 as an offshoot of the Top500 list of the world's leading systems, ranking machines not just on performance, but also on energy efficiency, measured in FLOPS (floating point operations per second) per watt.

In 2022, Frontier became the first supercomputer to reach 1 exaFLOP, or a million trillion (1,000,000,000,000,000,000) FLOPS, when unveiled at Oak Ridge National Laboratory in Tennessee. While this machine ranked second in terms of energy efficiency, a smaller-scale version known as Frontier TDS (test and development system) achieved first place on the Green500, with 62.7 GFlops/watt.

Although biological and non-biological systems differed in their processing of information, research had shown that the human brain possessed a "thinking speed" equivalent to about 1 exaFLOP. Achieving this level of performance in machines marked a particularly notable milestone for Frontier and other high-performance computing systems.

As well as conventional supercomputers, progress in so-called neuromorphic computing played a crucial role in improving energy efficiency. Unlike traditional architectures measured in FLOPS, these brain-inspired systems processed information through spiking neural networks, mimicking the way neurons communicate in the brain. By handling complex tasks with a fraction of the power required by earlier machines, neuromorphic chips helped pave the way for breakthroughs in energy-efficient computing.

These advances in neuromorphic design, alongside improvements in cooling and other techniques, led to increasingly efficient supercomputers. By the 2040s, engineers could achieve an exaFLOP of performance within a single 100-kilowatt (kW) server rack, approximately the size of a wardrobe.

The 2060s marked another pivotal milestone, as exaFLOP performance could now be achieved in desktop machines. This miniaturisation brought immense computing power to homes, businesses, and laboratories, revolutionising fields such as medicine, environmental modelling, and human-computer interfaces. These machines, while still consuming hundreds of watts, were a giant leap forward in efficiency compared to their predecessors.

Today, in 2084, the same processing capacity as a biological human brain – 1 exaFLOP – can be achieved using a mere 20 watts. This trivial amount of power is less than that drawn by a typical incandescent light bulb.
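The scale of this improvement can be put into numbers using only the figures quoted above. The sketch below is a back-of-the-envelope comparison, not a benchmark: it assumes, purely for illustration, that the 62.7 GFlops/watt efficiency of Frontier TDS could be scaled to a full exaFLOP, and the function names are hypothetical.

```python
# Energy-efficiency comparison using only the figures quoted in the text.
EXAFLOPS = 1e18  # floating point operations per second


def flops_per_watt(flops: float, watts: float) -> float:
    """Energy efficiency of a system delivering `flops` while drawing `watts`."""
    return flops / watts


def watts_for_exaflops(efficiency: float) -> float:
    """Power needed for 1 exaFLOP at a given efficiency (FLOPS per watt)."""
    return EXAFLOPS / efficiency


frontier_tds = 62.7e9                           # 2022 Green500 figure, FLOPS per watt
rack_2040s = flops_per_watt(EXAFLOPS, 100e3)    # 1 exaFLOP in a 100 kW rack
brain_2084 = flops_per_watt(EXAFLOPS, 20)       # 1 exaFLOP at 20 watts

# Hypothetical scaling: power for 1 exaFLOP at Frontier TDS efficiency
print(f"2022 efficiency scaled up: {watts_for_exaflops(frontier_tds) / 1e6:.1f} MW")
print(f"2040s rack vs 2022: {rack_2040s / frontier_tds:,.0f}x more efficient")
print(f"2084 vs 2022:       {brain_2084 / frontier_tds:,.0f}x more efficient")
```

On these assumptions, an exaFLOP at 2022 efficiency would draw about 16 megawatts, so reaching 20 watts implies an efficiency gain of nearly a million-fold over six decades.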

The packing of so much computation into ever smaller volumes has fundamentally altered society in recent decades, creating what might now be described as a post-Singularity world. Humanoid androids are largely indistinguishable from real people in both appearance and behaviour. Previously confined to boring, dangerous, or undesirable jobs, these machines are taking on increasingly prominent roles in many professions. While this development has caused immense disruption to the global employment landscape, it has also solved many demographic challenges that arose earlier in the century.

As the line between androids and real people becomes ever more blurred, the debate over android rights has intensified in recent years. The latest generation of robots has gained increased legal recognition, with many landmark court cases, resulting in some governments now granting them limited forms of personhood. Some androids possess what seems to be a form of artificial consciousness, raising further ethical questions about their treatment and status in society. As they become more integrated into daily life, the boundaries of human rights and android rights are constantly being tested.

The applications of human-level energy efficiency in computing extend beyond androids. With 1 exaFLOP of computing at just 20 watts, medicine and other fields are being revolutionised too. Brain-computer interfaces, advanced prosthetics, and human augmentation are flourishing as the true age of transhumanism dawns.

Even more speculative applications – such as mind uploading – are beginning to emerge from the realm of science fiction and into serious scientific inquiry. Looking ahead to the 22nd century, the possibilities for integrating human consciousness with machines may lead to new forms of existence, making the distinction between organic and artificial life disappear entirely.

Androids are widespread in law enforcement

Fully autonomous, mobile robots with human-like features and expressions are deployed in many cities now. These androids are highly intelligent, able to operate in almost any environment and deal with various duties. As well as their powerful sensory and communication abilities, they have access to bank accounts, tax, travel, shopping and criminal records, allowing them to instantly identify people on the street.

The presence of these machines is freeing up a tremendous amount of time for human officers. They are also being used in crowd control and riot situations. Equipped with inhuman strength and speed, a single android can be highly intimidating and easily take on many people if needed. Special controls are embedded in their programming to prevent the use of excessive force.

2085

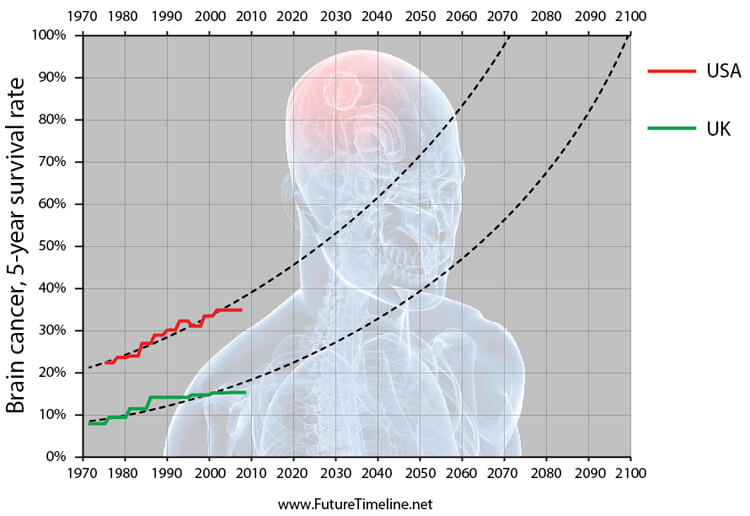

Five-year survival rates for brain tumours are reaching 100%

Because of their invasive and infiltrative nature in the limited space of the intracranial cavity, brain tumours were once considered a death sentence. Detection usually occurred in advanced stages when the presence of the tumour had caused unexplained symptoms. Glioblastoma multiforme – the most common and most aggressive malignant primary brain tumour in humans – had a median survival period of only 12 months from diagnosis, even with aggressive radiotherapy, chemotherapy and surgical excision.

In the 21st century, however, detection and treatment methods improved greatly with nanomedicine, gene therapy and technologies able to scan, analyse and run emulations of complete brains in astonishing detail. Alongside this was the emergence of transhumans who began utilising permanent implants in their brains and bodies, alerting them to the first signs of danger. Towards the end of this century, five-year survival rates for brain cancer are approaching 100% in many countries, the US being among the first.

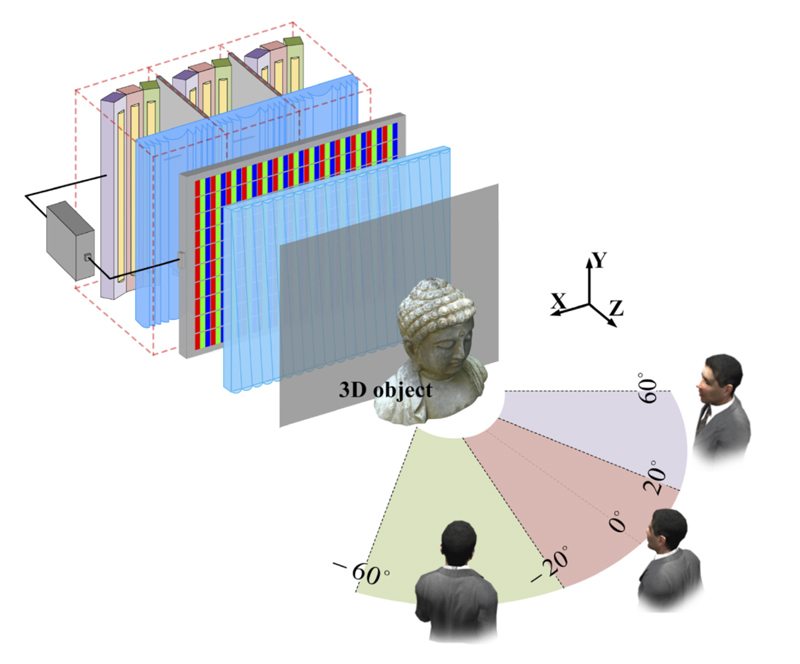

Light field is the latest in display technology

Following the mainstream adoption of multiview horizontal-parallax-only (HPO) displays in the 2040s and volumetric 4K in the 2060s, the next major iteration of display technology is known as light field. This requires another order of magnitude increase in the transmission bit rate.

Whereas earlier displays had only horizontal parallax and a limited viewing angle, this latest generation can reproduce both horizontal and vertical parallax while also providing more view zones. In other words, a fully 3D effect can be seen by many observers at varying positions and whether standing or sitting.

Credit: Adapted from Boyang L, et al. (2019), Time-multiplexed light field display with 120-degree wide viewing angle, Optics Express.

As well as home entertainment systems, light field is used in galleries, museums, and other such venues. For instance, it can generate near-perfect replicas of artworks or relics that might be too rare, fragile, or otherwise inaccessible for the public. Since they are virtual, they can be showcased in many locations around the world simultaneously. Light field can also enhance the shared viewing of content relevant to medical or research settings – biological or chemical structures, for example. The most advanced versions have enough spatial information to project full 360° viewing angles.

This technology is finally perfected in the early 22nd century with the arrival of true holographic displays, which require yet another order of magnitude increase in the bit rate. Not only do these correct the last remaining flaws in terms of visual realism, they also enable content to be projected outside the limited volume of the display and into the real world.

By FutureTimeLine